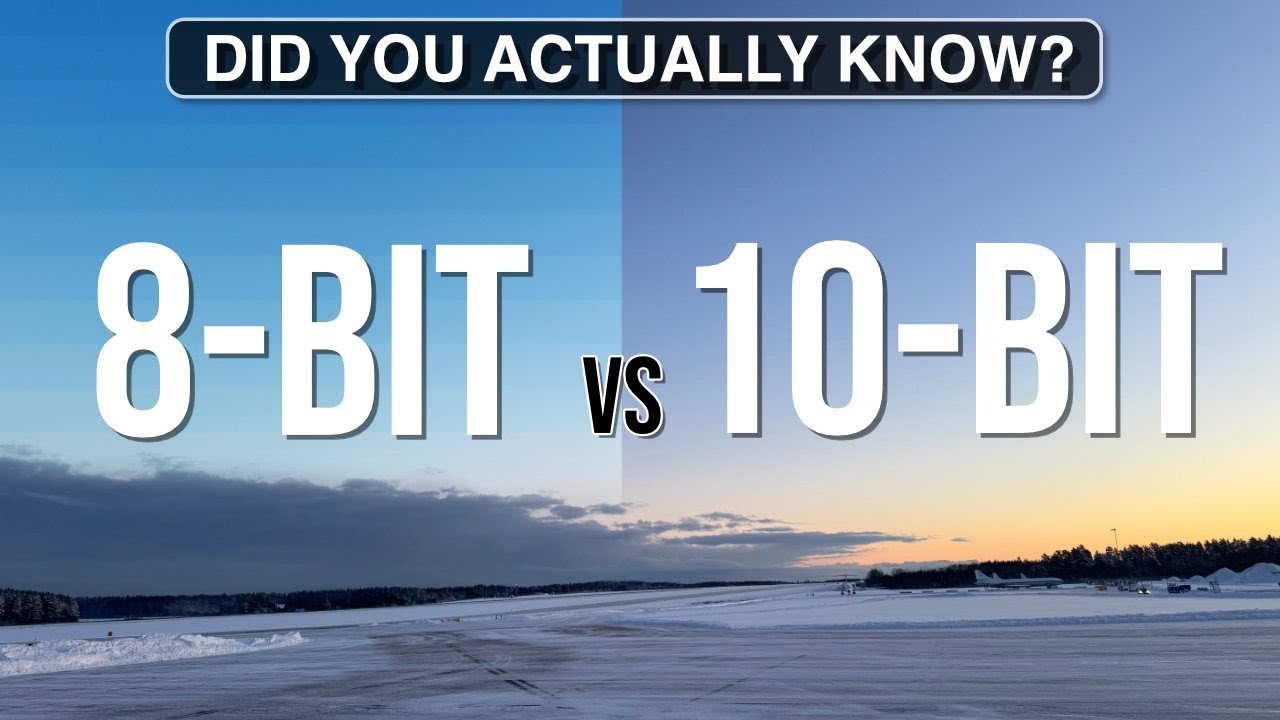

8-Bit vs 10-Bit

By upgrading to 10-bit HDR and watching 10-bit HDR content, you'll be sure to enjoy every scene. Generally speaking, a 10-bit colour display looks better, so it might, for example, show deeper blacks or more vibrant colours. But no, a display won't look much better simply because it can display 10-bit colour. What the two extra bits buy is finer gradation, 1,024 levels per channel instead of 256, which mainly shows up as less banding in smooth gradients. It is known that some panels that receive a 12-bit...
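The gap between 8-bit and 10-bit is easiest to see in numbers. Below is a minimal Python sketch (the helper names are hypothetical) that counts levels per channel and total colours, and simulates how many visible steps a black-to-white ramp splits into across a 3840-pixel-wide (4K) screen. It assumes a simple linear ramp with no dithering.

```python
def levels_per_channel(bits: int) -> int:
    """Distinct code values a single colour channel can take."""
    return 2 ** bits


def total_colours(bits: int) -> int:
    """Distinct RGB combinations: three channels with 2**bits levels each."""
    return levels_per_channel(bits) ** 3


def bands_in_ramp(bits: int, width_px: int = 3840) -> int:
    """Quantise a black-to-white horizontal ramp across width_px pixels
    (assumption: no dithering) and count the distinct steps, i.e. bands."""
    max_code = levels_per_channel(bits) - 1
    return len({round(x / (width_px - 1) * max_code) for x in range(width_px)})


for bits in (8, 10):
    bands = bands_in_ramp(bits)
    print(f"{bits}-bit: {levels_per_channel(bits)} levels per channel, "
          f"{total_colours(bits):,} colours, "
          f"{bands} bands about {3840 / bands:.2f} px wide on a 4K ramp")
```

In this model an 8-bit ramp breaks into 256 bands about 15 px wide, while a 10-bit ramp breaks into 1,024 bands about 3.75 px wide, which is why the benefit shows up in skies and shadows rather than as "better colour" overall.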

Refresh Rate and Bit Depth on the ASUS PG27UQ

So it's like this: I have an ASUS PG27UQ monitor. I noticed that when I tinker with the refresh rate in the NVIDIA driver, the output changes from 10-bit (at 98 Hz or lower) to 8-bit + dithering (at 120 Hz or higher). That switch is a bandwidth limit: at 4K, the monitor's DisplayPort 1.4 connection only has room for 10-bit RGB up to 98 Hz, so at higher refresh rates the driver sends 8 bits per channel and dithers to approximate the missing shades.
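To see how 8 bits plus dithering can stand in for 10 bits, here is a minimal Python sketch of temporal dithering (frame rate control, FRC). It is a simplified illustrative model, not how NVIDIA's driver or any particular panel implements it; the function name `frc_cycle` is hypothetical, and the model assumes a 10-bit code is simply an 8-bit code with two extra fractional bits.

```python
def frc_cycle(code10: int) -> list[int]:
    """Approximate one 10-bit code with four 8-bit frames (temporal dithering).

    Simplified model (assumption): the 10-bit code is read as an 8-bit code
    plus two fractional bits (code10 / 4). Real panels also vary the pattern
    spatially across neighbouring pixels.
    """
    if not 0 <= code10 <= 1023:
        raise ValueError("code10 must be in 0..1023")
    base, quarters = divmod(code10, 4)  # 8-bit level and leftover quarter-steps
    high = min(base + 1, 255)           # the next 8-bit level, clamped at 255
    # Show the higher level in `quarters` out of every 4 frames.
    return [high] * quarters + [base] * (4 - quarters)


for code in (512, 513, 514, 515):
    frames = frc_cycle(code)
    print(code, frames, "average", sum(frames) / 4, "target", code / 4)
```

Averaged over the four frames, the eye sees `code10 / 4` exactly for every code up to 1020; only the top codes (1021 to 1023) are capped by the clamp at 255.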

Best Settings for HDMI 2.0 and HDMI 2.1

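If you're running HDMI 2.1, then RGB 10-bit at 4K is your best option for either SDR or HDR. If you're limited to HDMI 2.0 bandwidth, then use 4:2:2 10-bit for HDR and full RGB 8-bit for SDR, both at 4K 60 Hz, because 10-bit RGB at 4K 60 Hz needs more bandwidth than HDMI 2.0 can carry.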
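Both the HDMI advice above and the PG27UQ's drop to 8-bit at 120 Hz come down to link bandwidth. Below is a rough Python sketch that compares the uncompressed video payload of a mode against each link's effective rate. Assumptions: the 4K 60 Hz timing is the standard CTA-861 one (4400 x 2250 total, 594 MHz), but the reduced-blanking totals used for the high-refresh DisplayPort cases are hypothetical, 4:2:2 is modelled as 2 x bit depth per pixel (real HDMI packs it into a fixed 24-bit container), and audio, DSC and packet overhead are ignored. All function and constant names are illustrative.

```python
from dataclasses import dataclass

# Effective payload rate of each link in Gbit/s: raw rate x line-coding efficiency.
LINK_PAYLOAD_GBPS = {
    "HDMI 2.0": 18.0 * 8 / 10,   # TMDS, 3 x 6 Gbit/s, 8b/10b -> 14.4
    "HDMI 2.1": 48.0 * 16 / 18,  # FRL, 4 x 12 Gbit/s, 16b/18b -> ~42.67
    "DP 1.4": 32.4 * 8 / 10,     # HBR3, 4 x 8.1 Gbit/s, 8b/10b -> 25.92
}

# Average bits per pixel per bit of depth for each chroma format (simplified).
CHROMA_FACTOR = {"RGB": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


@dataclass(frozen=True)
class Timing:
    h_total: int       # active width + horizontal blanking, in pixels
    v_total: int       # active height + vertical blanking, in lines
    refresh_hz: float

    def pixel_clock_hz(self) -> float:
        return self.h_total * self.v_total * self.refresh_hz


# CTA-861 timing for 3840x2160 at 60 Hz (594 MHz pixel clock).
UHD_60 = Timing(4400, 2250, 60)


def reduced_blanking_4k(refresh_hz: float) -> Timing:
    # Hypothetical reduced-blanking totals; a real monitor's EDID timings differ.
    return Timing(3840 + 80, 2160 + 62, refresh_hz)


def required_gbps(timing: Timing, bit_depth: int, chroma: str) -> float:
    """Uncompressed video payload in Gbit/s for the given mode."""
    return timing.pixel_clock_hz() * CHROMA_FACTOR[chroma] * bit_depth / 1e9


def fits(link: str, timing: Timing, bit_depth: int, chroma: str) -> bool:
    return required_gbps(timing, bit_depth, chroma) <= LINK_PAYLOAD_GBPS[link]


cases = [
    ("HDMI 2.0", UHD_60, 8, "RGB"),
    ("HDMI 2.0", UHD_60, 10, "RGB"),
    ("HDMI 2.0", UHD_60, 10, "4:2:2"),
    ("HDMI 2.1", UHD_60, 10, "RGB"),
    ("DP 1.4", reduced_blanking_4k(98), 10, "RGB"),
    ("DP 1.4", reduced_blanking_4k(120), 10, "RGB"),
    ("DP 1.4", reduced_blanking_4k(120), 8, "RGB"),
]
for link, timing, depth, chroma in cases:
    need = required_gbps(timing, depth, chroma)
    verdict = "fits" if fits(link, timing, depth, chroma) else "too much"
    print(f"{link:8} 4K {timing.refresh_hz:>3} Hz {depth:>2}-bit {chroma:5} "
          f"needs {need:5.2f} of {LINK_PAYLOAD_GBPS[link]:5.2f} Gbit/s: {verdict}")
```

Under these assumptions the results line up with the advice: 8-bit RGB (about 14.26 Gbit/s) just fits HDMI 2.0 at 4K 60 Hz while 10-bit RGB (about 17.82 Gbit/s) does not, and on DisplayPort 1.4 10-bit RGB fits at 98 Hz but not at 120 Hz, where 8-bit still does.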